From Traditional Data Lakehouse to Data Intelligence Platform: How Databricks Is Becoming the Operating System for AI

In the last couple of years, we have witnessed a wave of transformation in traditional data warehouses and transactional databases. This has led to the emergence of the first generation of data lakehouses with cloud-enabled value chains and ecosystems. Industry projections indicate that nearly 67% of organizations will adopt the lakehouse architecture as their primary analytics platform over the next few years (Yellowbrick, n.d.).

These data ecosystems solve basic storage issues by introducing unified storage as a single source of truth, eliminating the silos between structured data warehouses and unstructured data lakes. Lakehouses adopt open formats such as Parquet and Delta Lake, enforce schemas so that data fed into models is structurally consistent, and provide a basic level of quality control that raw data lakes lacked. However, despite these benefits, the slow-moving architecture of many lakehouses is often the primary reason GenAI projects fail to reach production.

The Paradox of Benefits: How These ‘Benefits’ Lead to Failure in GenAI and Agentic AI Proofs of Concept (POCs)

Data lakehouses offer several core benefits, including the ability to run AI workloads on different engines such as PyTorch, TensorFlow, or Mosaic AI without moving the data, and a single place to fetch historical training data. Still, traditional lakehouse architectures are becoming a primary reason GenAI projects and POCs fail, for the following reasons:

Data swamps disguised as ‘single sources of truth’

Lakehouses make it easy to ingest data; as a result, enterprises tend to accumulate far more data than they actively curate. Over time, the ‘single source of truth’ turns into a swamp of outdated, duplicate, or conflicting content. While experimentation works with curated samples, production agents pull data from the broader lake, retrieve inconsistent context, and generate confident but incorrect responses.

Static governance in a dynamic AI world

Traditional governance models stop at datasets and tables. They are not designed to govern Large Language Model (LLM) outputs or to control what context an AI system can retrieve at runtime. GenAI POCs often succeed because they run under privileged developer access. At scale, however, the platform fails to enforce identity-aware, policy-driven retrieval, allowing AI systems to access more information than individual users are authorized to see.
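To make "identity-aware, policy-driven retrieval" concrete, here is a minimal sketch in plain Python, assuming each context chunk carries a hypothetical `allowed_groups` label and the end user's group memberships are known at request time. The key design choice is that authorization runs before relevance ranking, so the model is never handed context the user could not open directly. `Chunk` and `retrieve_for_user` are illustrative names, not a Databricks API.

```python
from dataclasses import dataclass, field

# Hypothetical illustration of identity-aware retrieval; not a Databricks API.


@dataclass(frozen=True)
class Chunk:
    """A retrievable piece of context with a hypothetical access label."""
    doc_id: str
    text: str
    allowed_groups: frozenset = field(default_factory=frozenset)


def retrieve_for_user(query_terms, chunks, user_groups, top_k=3):
    """Return up to top_k relevant chunks that the end user is entitled to see.

    Authorization is checked before ranking, so a chunk the user cannot
    access is never a candidate, however relevant it is to the query.
    """
    groups = set(user_groups)
    visible = [c for c in chunks if c.allowed_groups & groups]
    terms = {t.lower() for t in query_terms}

    def score(chunk):
        # Toy relevance: count of query terms that appear in the chunk text.
        return len(terms & set(chunk.text.lower().split()))

    ranked = sorted(visible, key=score, reverse=True)
    return [c for c in ranked if score(c) > 0][:top_k]


corpus = [
    Chunk("hr-1", "salary bands for engineering roles", frozenset({"hr"})),
    Chunk("kb-7", "engineering onboarding checklist", frozenset({"all_staff"})),
]

# An ordinary employee asking about engineering sees only the onboarding doc.
print([c.doc_id for c in retrieve_for_user(["engineering"], corpus, ["all_staff"])])  # ['kb-7']
```

The same query issued under a privileged developer identity would also surface `hr-1`, which is exactly the gap between a POC and a production deployment described above.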

Batch-oriented pipelines versus real-time intelligence

Traditional lakehouse pipelines are optimized for historical data processed in batches. This works for reporting and business intelligence (BI) but breaks down when AI systems are expected to answer questions about the current state, such as live inventory, recent transactions, or operational events. Agents need near-real-time data refreshes, not scheduled batch updates, to make informed decisions.
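One way to stop an agent from answering current-state questions with stale batch data is a freshness guard that checks how old each input is before it becomes context. The sketch below is illustrative only: the `Snapshot` structure, the `fresh_context` helper, and the 15-minute threshold are hypothetical assumptions, and in practice the refresh timestamp would come from pipeline metadata.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical freshness guard; names and threshold are illustrative only.


@dataclass
class Snapshot:
    """A piece of operational data and the time it was last refreshed."""
    name: str
    value: object
    refreshed_at: datetime


def fresh_context(snapshots, now, max_age):
    """Split snapshots into usable context and the names of stale entries.

    The agent reasons only over `usable`; `stale` can be reported to the user
    or used to trigger a refresh instead of being silently relied on.
    """
    usable, stale = {}, []
    for snap in snapshots:
        if now - snap.refreshed_at <= max_age:
            usable[snap.name] = snap.value
        else:
            stale.append(snap.name)
    return usable, stale


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
snapshots = [
    Snapshot("inventory_sku_42", 17, now - timedelta(minutes=2)),
    Snapshot("daily_sales_batch", 1032, now - timedelta(hours=20)),
]
usable, stale = fresh_context(snapshots, now, max_age=timedelta(minutes=15))
print(usable, stale)  # {'inventory_sku_42': 17} ['daily_sales_batch']
```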

The metadata gap

AI and GenAI applications need the semantic context of the data, not just the data itself. Traditional lakehouses store information efficiently but lack business meaning, relationships, and intent. As a result, the context needs to be encoded manually for every use case, making solutions fragile and difficult to scale.

Data Intelligence Layer: Evolving from Lakehouse to AI Platform

The Evolution from Data Lake → Lakehouse → AI/Data Intelligence Platform

A traditional data lakehouse is passive, whereas enterprise AI requires an active system. Before examining the data intelligence layer, it is important to understand that it is not just another tool integrated into the data platform. It must address:

- How to turn the data swamp from stored data into usable intelligence by evaluating assets on usage, trust, and impact rather than mere existence, because intelligence is knowing what matters, not storing more.

- How to turn static data governance into "Governance as a Runtime Control Plane" that lets AI and agents operate with guardrails, policy-aware context, and autonomy without chaos.

- How the data intelligence layer can enable real-time AI.

- How to close the metadata gap by moving from passive metadata to active intelligence that guides behavior. If metadata cannot influence decisions, it is just documentation.
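The first of these questions, judging data by usage, trust, and impact rather than by existence, can be sketched as a scoring pass over catalog entries. Everything here is a hypothetical assumption for illustration: the three signals, the 0.4/0.4/0.2 weights, and the 0.5 threshold. A real platform would derive the signals from query history, data quality checks, and lineage.

```python
from dataclasses import dataclass

# Hypothetical curation scoring; signals, weights, and threshold are assumptions.


@dataclass
class Asset:
    name: str
    queries_last_30d: int       # usage signal
    quality_pass_rate: float    # trust signal, 0.0 to 1.0
    downstream_consumers: int   # impact signal, e.g. counted from lineage


def curation_scores(assets, weights=(0.4, 0.4, 0.2)):
    """Score each asset in [0, 1] from normalized usage, trust, and impact."""
    w_usage, w_trust, w_impact = weights
    max_q = max(a.queries_last_30d for a in assets) or 1
    max_c = max(a.downstream_consumers for a in assets) or 1
    return {
        a.name: w_usage * a.queries_last_30d / max_q
        + w_trust * a.quality_pass_rate
        + w_impact * a.downstream_consumers / max_c
        for a in assets
    }


def triage(assets, threshold=0.5):
    """Split assets into (curate, archive_candidates), highest score first."""
    scores = curation_scores(assets)
    ranked = sorted(scores, key=scores.get, reverse=True)
    curate = [n for n in ranked if scores[n] >= threshold]
    archive = [n for n in ranked if scores[n] < threshold]
    return curate, archive


catalog = [
    Asset("orders_gold", 900, 0.99, 12),
    Asset("orders_copy_2019", 0, 0.60, 0),
    Asset("customer_360", 300, 0.95, 5),
]
curate, archive = triage(catalog)
print(curate, archive)  # ['orders_gold', 'customer_360'] ['orders_copy_2019']
```

The unused, unreferenced 2019 copy falls below the threshold even though it still "exists" in the lake, which is the distinction the list above draws.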

How Does Databricks’ Data Intelligence Platform Address Traditional Data Lakehouse Challenges?

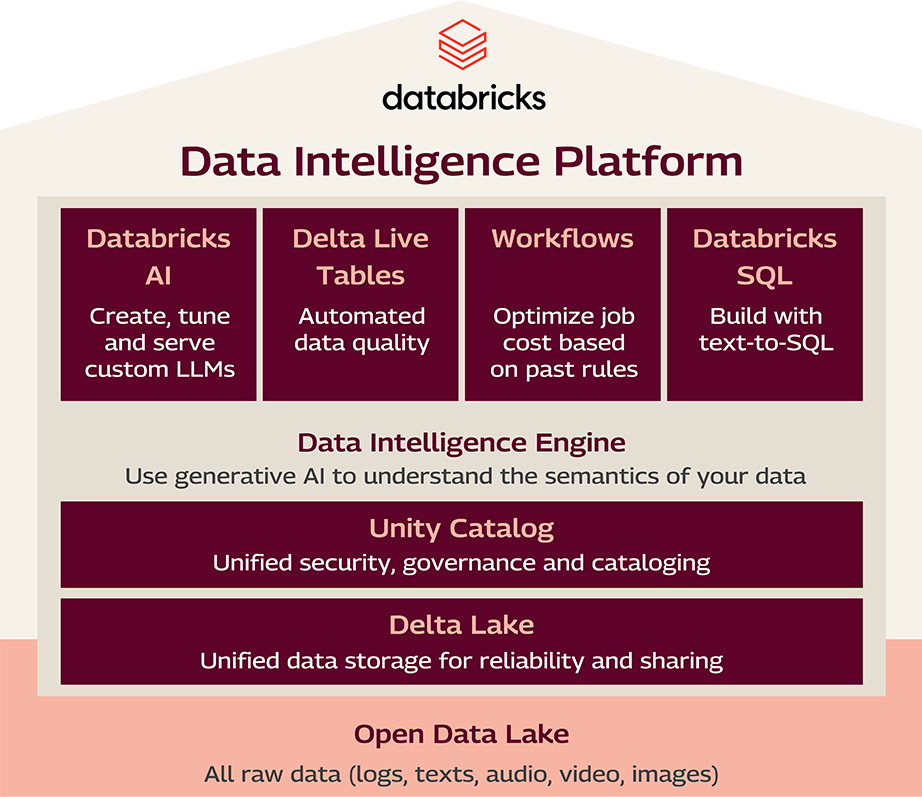

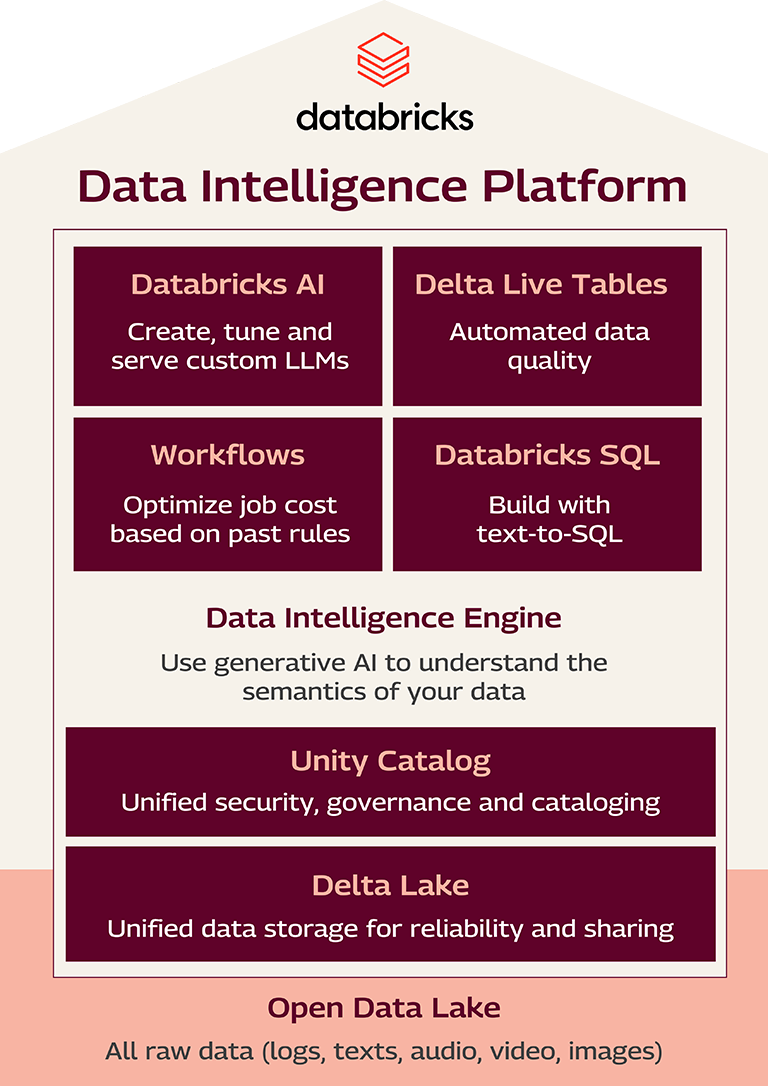

The data intelligence platform by Databricks continuously transforms raw data into trusted, governed, cost-aware decisions by combining information, semantics, governance, machine learning (ML), and agents into a single, unified system.

Databricks is evolving from a traditional lakehouse that stores data into a data intelligence platform that governs meaning, controls decisions, and enables safe autonomy at scale. As the platform evolves, Databricks is increasingly described as an operating system for AI, providing a shared, governed, and intelligent foundation on which all enterprise AI systems run, reason, and make decisions. However, it does not replace user interfaces, business applications, LLM providers, or workflow tools.

An Architect’s View on How Databricks’ Data Intelligence Platform Controls AI

The data intelligence platform addresses the shortcomings of the traditional lakehouse by adding meaning, control, and decision intelligence, turning stored data into governed, real-time, AI-ready knowledge.

Figure 1 illustrates how Databricks brings raw data into a single platform where AI, pipelines, and governance operate together in real time.

The platform provides shared memory, runtime governance, execution coordination, observability, and cost control for all AI workloads built on enterprise data. In Databricks, the data intelligence layer is emerging across the following components:

Unity Catalog tracks who accesses which data from which workload and captures lineage that links each source to the features, models, and decisions built on it, supporting AI-powered data discovery and downstream impact analysis. Unity Catalog also adds semantic understanding of data by closing metadata gaps through metadata tagging.
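Lineage is what lets a team answer "which sources influenced this decision?". Unity Catalog records lineage on its own; the sketch below only illustrates the traversal idea on a hand-built, hypothetical graph in which each node lists the nodes it was derived from, walked with breadth-first search. The node names and the `trace_sources`/`root_sources` helpers are assumptions, not part of any Databricks API.

```python
from collections import deque

# Hypothetical lineage edges (node -> nodes it was derived from); illustrative only.
UPSTREAM = {
    "decision:credit_limit_increase": ["model:credit_risk_v3"],
    "model:credit_risk_v3": ["feature:avg_monthly_spend", "feature:late_payments_12m"],
    "feature:avg_monthly_spend": ["table:transactions"],
    "feature:late_payments_12m": ["table:transactions", "table:billing"],
}


def trace_sources(node, upstream=UPSTREAM):
    """Return every upstream ancestor of `node` in breadth-first order."""
    seen, order = set(), []
    queue = deque(upstream.get(node, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # shared ancestors are reported once
        seen.add(current)
        order.append(current)
        queue.extend(upstream.get(current, []))
    return order


def root_sources(node, upstream=UPSTREAM):
    """Return only the ancestors with no parents, i.e. the raw sources."""
    return [n for n in trace_sources(node, upstream) if not upstream.get(n)]


print(root_sources("decision:credit_limit_increase"))  # ['table:transactions', 'table:billing']
```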

Unity Catalog enforces fine-grained access control at query and model execution time. Row- and column-level policies apply dynamically to SQL queries, feature access, and model inference, so governance follows data as it flows through AI workflows. Unity Catalog is the layer where the platform begins to behave like an AI operating system.
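In Unity Catalog, row- and column-level policies are attached to tables as row filters and column masks, typically written as SQL user-defined functions. To show only the logic, here is a plain-Python sketch of the same idea: a row filter keeps rows whose region one of the caller's groups covers, and a column mask redacts email addresses unless the caller is in a privileged group. The group names, columns, and helper functions are hypothetical.

```python
# Hypothetical policy logic mirroring a row filter and a column mask;
# group names and columns are illustrative, not a Unity Catalog API.
REGION_ACCESS = {"sales_emea": {"EMEA"}, "sales_amer": {"AMER"}, "admins": {"EMEA", "AMER"}}
UNMASKED_GROUP = "admins"


def row_filter(row, groups):
    """Keep a row only if one of the caller's groups covers its region."""
    return any(row["region"] in REGION_ACCESS.get(g, set()) for g in groups)


def mask_email(value, groups):
    """Redact the local part of an email for non-privileged callers."""
    if UNMASKED_GROUP in groups:
        return value
    _, _, domain = value.partition("@")
    return "***@" + domain


def governed_query(rows, groups):
    """Apply the row filter, then the column mask, for the calling identity."""
    return [
        {**row, "email": mask_email(row["email"], groups)}
        for row in rows
        if row_filter(row, groups)
    ]


customers = [
    {"id": 1, "region": "EMEA", "email": "ana@example.com"},
    {"id": 2, "region": "AMER", "email": "bo@example.com"},
]
print(governed_query(customers, ["sales_emea"]))
# [{'id': 1, 'region': 'EMEA', 'email': '***@example.com'}]
```

Because the policy is evaluated per caller, the same query, feature lookup, or inference request returns different data to different identities without any change to the calling code.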

Delta Live Tables (DLT) support incremental processing of late-arriving, evolving, and imperfect data, along with event-driven ingestion that turns pipelines into continuous signal flows. Because AI is only as intelligent as its freshest signals, this ensures downstream systems receive the latest data so that AI and agents can make informed decisions.
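The core idea behind incremental handling of late data can be sketched without any framework: merge only each new micro-batch, upsert by key while keeping the version with the latest event time, and drop events that arrive later than a watermark allows. This is a hypothetical plain-Python illustration of the concept; DLT pipelines express it declaratively through streaming tables and change-data-capture flows such as `APPLY CHANGES`, and the `apply_batch` helper and 10-minute delay here are assumptions.

```python
from datetime import datetime, timedelta

# Hypothetical illustration of incremental upserts with a watermark.


def apply_batch(state, events, high_water, delay):
    """Merge one micro-batch of events into `state` incrementally.

    state:      dict key -> (event_time, value), the current materialized view
    high_water: latest event time seen in earlier batches, or None
    delay:      how far behind high_water an event may arrive and still count
    Returns (new_high_water, dropped_events).
    """
    cutoff = high_water - delay if high_water is not None else None
    dropped = []
    for event in events:
        if cutoff is not None and event["event_time"] < cutoff:
            dropped.append(event)  # too late: beyond the watermark
            continue
        current = state.get(event["key"])
        # Keep whichever version has the latest event time, so an
        # out-of-order older event never overwrites newer data.
        if current is None or event["event_time"] >= current[0]:
            state[event["key"]] = (event["event_time"], event["value"])
    if events:
        newest = max(e["event_time"] for e in events)
        high_water = newest if high_water is None else max(high_water, newest)
    return high_water, dropped


t0 = datetime(2024, 1, 1, 9, 0)
delay = timedelta(minutes=10)
inventory = {}

hw, _ = apply_batch(inventory, [
    {"key": "sku-1", "event_time": t0, "value": 10},
    {"key": "sku-1", "event_time": t0 + timedelta(minutes=5), "value": 8},
], None, delay)

hw, dropped = apply_batch(inventory, [
    {"key": "sku-2", "event_time": t0 + timedelta(minutes=1), "value": 3},   # late, within watermark
    {"key": "sku-1", "event_time": t0 + timedelta(minutes=2), "value": 99},  # older than current sku-1
    {"key": "sku-1", "event_time": t0 - timedelta(minutes=30), "value": 1},  # beyond watermark
    {"key": "sku-1", "event_time": t0 + timedelta(minutes=12), "value": 6},
], hw, delay)
```

After the second batch, `sku-1` holds the value from 09:12, the late `sku-2` reading is still accepted, and only the event from 08:30 is dropped.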

Delta Lake addresses data swamp challenges by providing a unified, trusted source of truth, with one version of the facts across BI, ML, and agents, supported by immutable, versioned data.
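Delta Lake records each commit as a new table version, which is what makes reads reproducible ("time travel"); with Delta Lake itself, a past version can be read using options such as `versionAsOf`. The toy class below is a hypothetical model of that behavior, not Delta Lake's implementation (which stores Parquet files plus a transaction log): every write appends an immutable snapshot, and readers can pin a version so BI, ML, and agents can agree on the same facts.

```python
# Hypothetical toy model of table versioning; not Delta Lake's implementation.


class VersionedTable:
    """Each commit creates a new immutable snapshot; old versions stay readable."""

    def __init__(self):
        self._versions = [()]  # version 0 is the empty table

    @property
    def latest_version(self):
        return len(self._versions) - 1

    def append(self, rows):
        """Commit rows on top of the latest snapshot and return the new version."""
        self._versions.append(self._versions[-1] + tuple(rows))
        return self.latest_version

    def overwrite(self, rows):
        """Commit a full replacement; earlier versions are left untouched."""
        self._versions.append(tuple(rows))
        return self.latest_version

    def read(self, version=None):
        """Read the latest snapshot, or pin an earlier version (time travel)."""
        if version is None:
            version = self.latest_version
        if not 0 <= version <= self.latest_version:
            raise ValueError(f"version {version} does not exist")
        return list(self._versions[version])


table = VersionedTable()
v1 = table.append([("sku-1", 10), ("sku-2", 4)])
v2 = table.overwrite([("sku-1", 7)])
print(table.read(), table.read(v1))  # [('sku-1', 7)] [('sku-1', 10), ('sku-2', 4)]
```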

The Data Intelligence Engine turns data into knowledge. This layer uses AI to analyze datasets, infer relationships, assist discovery, and bridge human intent and machine data, helping generative AI understand the semantics of the data and closing the metadata gap.
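One small ingredient of "inferring relationships" is spotting likely join keys between tables. The sketch below swaps in a simple, openly heuristic technique assumed for illustration, value containment: a column is proposed as a foreign key to another table's column if nearly all of its distinct values appear there and the target column is unique. It is not the Data Intelligence Engine's method, and `candidate_joins` and the sample tables are hypothetical.

```python
from itertools import permutations

# Hypothetical relationship inference by value containment; illustrative only.


def candidate_joins(tables, min_containment=0.9):
    """Suggest (child.col, parent.col, containment) links between tables.

    tables: dict table_name -> dict column_name -> list of values.
    A link is proposed when at least `min_containment` of the child column's
    distinct non-null values appear in a parent column that looks like a key
    (no nulls, no duplicates).
    """
    links = []
    for (child, child_cols), (parent, parent_cols) in permutations(tables.items(), 2):
        for p_col, p_vals in parent_cols.items():
            p_distinct = {v for v in p_vals if v is not None}
            if not p_distinct or len(p_distinct) != len(p_vals):
                continue  # parent column has nulls or duplicates: not a key
            for c_col, c_vals in child_cols.items():
                c_distinct = {v for v in c_vals if v is not None}
                if not c_distinct:
                    continue
                containment = len(c_distinct & p_distinct) / len(c_distinct)
                if containment >= min_containment:
                    links.append((f"{child}.{c_col}", f"{parent}.{p_col}", round(containment, 2)))
    return links


tables = {
    "customers": {"customer_id": [1, 2, 3], "country": ["DE", "US", "DE"]},
    "orders": {"order_id": [100, 101, 102, 103], "customer_id": [1, 1, 3, 2]},
}
print(candidate_joins(tables))  # [('orders.customer_id', 'customers.customer_id', 1.0)]
```

Small integer IDs can collide by chance, so a heuristic like this would normally be combined with column names, types, and query history before a link is recorded as metadata.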

Final Verdict: AI Needs Systems, Not Tools

Conventional lakehouses are designed to store, process, and analyze information. As organizations move from analytics-driven insights to GenAI and agentic AI systems, traditional architectures are reaching their limits: data swamps, static data governance, batch-oriented pipelines, and metadata gaps left by passive metadata.

This is why Databricks is evolving into a data intelligence platform that goes beyond storage and compute, adding semantic understanding, real-time governance, observability, and integrated feedback loops.

Together with services such as Databricks AI, Delta Live Tables, Workflows, and Databricks SQL, the platform enables real-time, cost-aware, governed intelligence for both humans and agents, turning the traditional lakehouse into a system-level foundation for enterprise AI.

References

- Yellowbrick. (n.d.). The lakehouse leap: How 67% of organizations are redefining data strategy. Retrieved from https://yellowbrick.com/blog/data-platform/the-lakehouse-leap-how-67-of-organizations-are-redefining-data-strategy/

Praveen is a seasoned thought leader with more than two decades of experience, working at the intersection of enterprise data platforms, AI architecture, and operating models, helping organizations move from analytics-centric data estates to AI-driven decision systems.

Over the years, he has been involved in designing and advising on large-scale data, analytics, and AI platforms across cloud and hybrid environments, with a strong focus on lakehouse architectures, governance frameworks, and enterprise-grade AI enablement. Much of his work has involved bridging the gap between data engineering, platform architecture, and AI adoption, especially as organizations transition from traditional BI and ML use cases to generative AI and agent-based systems.